
Nanoparticles: The Dwarfs are Coming

|

| Professor Shyamelendu Bose |

Professor Shyamelendu Bose of Drexel University recently addressed the Right Angle Club of Philadelphia about the astounding changes which take place when particles are made small enough, a new scientific field called nanotechnology. In one sense, the word "nano" comes from the Latin and Greek for "dwarf". In a modern scientific sense, the nano prefix indicates a billionth of something, as in a nanometer, which is a billionth of a meter. Or nanotubes, or nano calcium, or nano-anything you please. A nanometer is likely to be the dominant reference because it is around this width that particles begin to act strangely.

|

| Nanoparticles |

At this width, normally opaque copper particles become transparent, cloth becomes stain-resistant, and bacteria begin to emit clothing odor. Because the retina is peculiarly sensitive to this wavelength, colors assume an unusual brilliance, as in the colors of a peacock's tail. Stable aluminum powder becomes combustible. Normally insoluble substances such as gold become soluble at this size, malleable metals become tough and dent-proof, and straight particles assume a curved shape. Damascus steel is unusually strong because of the induction of nanotubes of nanometer width, and the brilliance of ancient stained-glass colors is apparently created by repeated grinding of the colored particles.

|

| Richard Feynman of Cal Tech |

Practical exploitation of these properties has almost instantly transformed older technologies and suggests the underlying explanation for others. International trade in materials made with nanotechnology has grown from a few billion dollars a year to $2.6 trillion in a decade, particularly through remaking common articles of clothing which were easily bent or soiled, into those which are stain and water resistant. Scientists with an interest in computer chips almost immediately seized upon the idea, since many more transistors can be packed together in more powerful arrangements. Richard Feynman of Cal Tech seems to be acknowledged as the main leader of this whole astounding field, which promises to devise new methods of drug delivery to disease sites through rolling metal nanosheets into nanotubes, then filling the tubes with a drug for delivery to formerly unreachable sites. Or making nanowires into various shapes for the creation of nano prostheses.

|

| Cal Tech on Los Angeles |

And on, and on. At the moment, the limitations of this field are the limitations only of imagination about what to do with it. For some reason, carbon is unusually subject to modification by nanotechnology. It brings to mind that the whole field of "organic" chemistry is based on the uniquenesses of the carbon atom, suggesting the two properties are the same or closely related. For a city with such a concentration of the chemical industry as Philadelphia has, it is especially exciting to contemplate the possibilities. And heartening to see Drexel take the lead in it. There has long been a concern that Drexel's emphasis on helping poor boys rise in the social scale has diverted its attention from helping the surrounding neighborhoods exploit the practical advances of science. The impact of Cal Tech on Los Angeles, or M.I.T. on Boston, Carnegie Mellon on Pittsburgh, and the science triangle of Durham on North Carolina seems absent or attenuated in Philadelphia. We once let the whole computer industry get away from us by our lawyers diverting us into the patent-infringement industry, and that sad story has a hundred other parallels in Philadelphia industrial history. Let's see Drexel go for the gold cup in this one -- forget about basketball, please.

Evo-Devo

|

| The Franklin Institute of Philadelphia |

The oldest annual awards for scientific achievement, probably the oldest in the world, are the gold medals awarded by the Franklin Institute of Philadelphia for the past 188 years. Nobel Prizes are more famous because they give more money, but that in a way involves another Philadelphia neighborhood achievement, because Nobel's investment managers have run up their remarkable investment record while operating out of Wilmington, Delaware. In the past century, one unspoken goal of the Franklin Institute has been to select winners who will later win a Nobel Prize, which has been the actual outcome more than a hundred times. That likelihood is one of the attractions of winning the Philadelphia prize, but in recent years some Nobel awards have acquired the reputation of being politicized, particularly the Peace and Literature prizes. Insiders at the Franklin are positively fierce about avoiding that, so the Franklin Institute prizes have become known for recognizing talent, not merely fame.

|

| Dr. Jan Gordon, Dr. Sean Carroll, and Dr. Cliff Tabin |

Within Philadelphia, the annual awards ceremony is one of the four top social events. The building will seat 800, but reservations are normally all sold out before invitations are even put in the mail. A major source of this recent success is clear; in the past twenty-five years, the packed audience is a star in the crown of Dr. Janice Taylor Gordon. This year, she hurried back from a bird-watching trip in Cuba, just in time to busy herself in the ceremonies where she sponsored Dr. Sean Carroll of the Howard Hughes Foundation and the University of Wisconsin, for the Benjamin Franklin medal in the life sciences. Carroll's normal activities are split between awarding $80 million a year of Hughes money for improving pre-college education from offices in Bethesda, Maryland, and directing activities of the Department of Genetics in Madison, Wisconsin. His prize this year has relatively little to do with either job; it's for revolutionizing our way of thinking about evolution and its underlying question, of how genes control body development in the animal kingdom. Evolution and Development are academic terms, familiarly shortened to Evo-Devo.

|

| Dr. Carroll's Butterflies |

My former medical school classmate Joshua Lederberg, also a resident of Philadelphia, was responsible for modern genetics, demonstrating linkages between the DNA of a cell, and the production of a particular body protein. For a time it looked as though we might confidently say, one gene, one protein, explains most of the biochemistry. In the exuberance of the scientific community, it even looked as though deciphering the genetic code of 25,000 human genes might explain all inherited diseases, and maybe most diseases had an inherited component if you took note of inherited weaknesses and disease susceptibilities. But that turned out to be pretty over-optimistic. Lederberg got into an unfortunate scientific dispute with French scientists about bacterial mating and now appears to have been wrong about it. But the most severe blow to the one gene, one protein explanation of disease came after the NIH spent a billion dollars on a crash program to map out the entire human genetic code. Unfortunately, only a few rare diseases were explained that way, at most perhaps 2%. Furthermore, all the members of the animal kingdom turned out to have pretty much the same genes. Not just monkeys and apes, but even sponges and worms, wildly different in outward appearance, carried substantially the same genes. Even fossils seem to show this has been the case for fifty million years. Obviously, animal genes have slowly been added and slowly deleted over the ages, but in the main, the DNA (deoxyribonucleic acid) complex has remained about the same during modest changes in evolution. Quite obviously, understanding the genome required some major additions to the theory's logic.

In late 1996, three scientists met in Philadelphia for a brain-storming session. Sean Carroll had demonstrated that mutations and perhaps an evolution of the wing patterns of butterflies and insects took place during their embryonic development, and seemed to be controlled by the unexplored proteins between active genes. Perhaps, he conjectured, the same was true of the whole animal kingdom. Gathered in the Joseph Leidy laboratory of the University of Pennsylvania, the three scientists from different fields proposed writing a paper about the idea, suggesting how scientists might explore proving it in the difficult circumstances of nine-month human pregnancies, in dinosaurs, in deep-sea fish and many remote corners of the animal kingdom. Filling yellow legal pads with their notes, the paleontologist (fossil expert) Neil Shubin of the University of Pennsylvania, the genetic biologist Sean Carroll of Wisconsin, and the Harvard geneticist Cliff Tabin worked out a scientific paper to describe what was known and what had to be learned. The central theme, suggested by Carroll, was that evolution probably takes place in the neighboring regulatory material which controls the stage of life early in the development of the fetus, and these regulatory proteins consist of otherwise inactive residual remnants of ancient animals from which the modern species had evolved. As the fetus unfolds from a fertilized egg into a little baby, its outward form resembles fish, reptiles, etc. And hence we get the little embryonic dance of ancient forms morphing into modern ones. In deference to the skeptics, it would have to be admitted that the physical resemblance to adult forms of the ancient animals is a trifle vague.

|

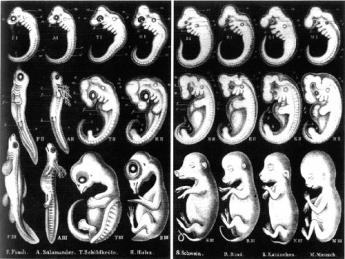

| Haeckel's drawings of vertebrate embryos, from 1874 |

Embryologists have long argued about Ernst Haeckel's incantation that "Ontogeny recapitulates phylogeny", which is a fancified way of saying approximately the same thing. Darwin's conclusion was more measured than Haeckel's and explanations of the phenomenon had been sidelined for almost two centuries. But it seems to be somewhat true that fossils which paleontologists have been discovering, going back to dinosaurs and beyond, do mimic the stages of embryonic development up to that particular level of evolution, and might even use the same genetic mechanism. The genetic mechanism might evolve, leaving a trail behind of how it got to its then-latest stage. Borrowing the "Cis" prefix ("neighboring") from chemistry, these genetic ghosts have become known as Cis-regulatory proteins as the evidence accumulates of their nature and composition. Growing from the original three scientists huddled in Shubin's Leidy Laboratories in 1996, academic laboratories by the many dozens soon branched out, dispatching paleontologists to collect fossils in the Arctic, post-doctoral trainees to collect weird little animals from the deserts of China, and the caves of Mexico, everywhere confirming Carroll's basic idea. Even certain forms of one-celled animals which occasionally form clumps of multi-celled animals have been examined and found to be responding to a protein produced by neighboring bacteria which induces them to clump -- quite possibly the way multi-cellular life began. Many forms of mutant fish were extracted from caves to study how and why they developed hereditary blindness; the patterns of markings on the wings of butterflies were puzzled over, and the markings on obscure hummingbirds. The kids in the laboratory are now having all the fun with foreign travel, while the original trio find themselves spending a lot of time approving travel vouchers. Many fossils of animals long extinct have been studied for their evolution from one shape to another, especially in their necks, wings, and legs. Some pretty exciting new theories emerged, and are getting pretty well accepted in the scientific world. At times like this, it's lots of fun to be a scientist.

Wistar Institute, Spelled With an "A"

|

| Dr. Russel Kaufman |

The Right Angle Club was recently honored by hosting a speech by Dr. Russel Kaufman, the CEO of the Wistar Institute. Dr. Russel is a charming person, accustomed to talking on Public Broadcasting. But Russel with one "L"? How come? Well, sez Dr. Kaufman, that was my idea. "When I was a child, I asked my parents whether the word was pronounced any differently with one or two "Ls", and the answer was, No. So if I lived to a ripe old age, just think how much time and effort would be wasted by using that second "L". In eighty years, I might spend a whole week putting useless "Ls" on the end of Russel. I pestered my parents about it to the point where they just gave up and let me change my name". That's the kind of guy he is.

|

| The Wistar Institute |

The Wistar Institute is surrounded by the University of Pennsylvania, but officially has nothing to do with it. It owns its own land and buildings, has its own trustees and endowment, and goes its own academic way. That isn't the way you hear it from numerous Penn people, but since it was so stated publicly by its CEO, that has to be taken as the last word. It's going to be an important fact pretty soon since the Wistar Institute is soon going to embark on a major fund-raising campaign, designed to increase the number of laboratories from thirty to fifty. The Wistar performs basic research in the scientific underpinnings of medical advances, often making discoveries which lead to medical advances, but usually not engaging in direct clinical research itself. This is a very appealing approach for the many drug manufacturers in the Philadelphia region, since there can be many squabbles and changes about patents and copyrights when the commercial applications make an appearance. All of that can be minimized when fundamental research and applied research are undertaken sequentially. Philadelphia ought to remember better than it does, that it once lost the whole computer industry when the computer inventors and the institutions which supported them got into a hopeless tangle over who had the rights to what. The results in that historic case visibly annoyed the judge about the way the patent infringement industry seemingly interfered with the manufacture of the greatest invention of the Twentieth century.

Patents are a tricky issue, particularly since the medical profession has traditionally been violently opposed to allowing physicians to patent their discoveries, and for that matter, Dr. Benjamin Franklin never patented any of his many famous inventions. But the University of Wisconsin set things in a new direction with the patenting of Vitamin D, leading to a major funding stream for additional University of Wisconsin research. Ways can indeed be devised to serve the various ethical issues involved since "grub-staking" is an ancient and honorable American tradition, one which has rescued other far rougher industries from debilitating quarrels over intellectual property. You can easily see why the Wistar Institute badly needs a charming leader like Russel, to mediate the forward progress of our most important local activity. From these efforts in the past have emerged the Rabies and Measles vaccines, and the fundamental progress which made the polio vaccine possible.

It was a great relief to have it explained that there is essentially no difference at all between Wisters with an "E" and Wistars with an "A". There were two brothers who got tired of the constant confusion between them, see, and agreed to spell their names differently. When the Wistar Institute gathered a couple of hundred members of the family for a dinner, the grande dame of the family declared in a menacing way that there is no difference in how they are pronounced, either. It's Wister, folks, no matter how it is spelled. Since not a soul at the dinner dared to challenge her, that's the way it's always going to be.

Revenue Stream for Historical Documents

|

| The Economist |

1.-2. Save the cost of publishing long bibliographies in every copy of a book whose readers mostly make no use of the bibliography, while still making the bibliography available to those who will use it. The Economist magazine now does this in the form of notifying the reader that the source documents for their articles are available on the Economist web site. This is a suitable methodology for publications with only limited bibliographies, but very large circulation and short shelf life. Essentially, it is a free service to readers which reduces the clutter and intimidation of citations to essentially unavailable sources.

|

| Kindle |

On the other hand, a recent book about Thomas Jefferson had 120 pages of bibliography citations. Unless that book has an unusually scholarly readership, most of the cost of printing and distributing 120 pages was wasted. Printing that book without the citations, but also publishing a diskette, Kindle, or website -- containing nothing but citations -- would produce considerable savings for the publisher and reader, and they ought to be willing to pay for it. Unfortunately, there is resistance to anything new, and you may have to do it both ways until the idea catches on. The cost should include the right to some recognizable copy mark on the book, signifying this feature is available. Bowker and advertisers should be encouraged to use something smaller but similar. In that way, the concept can be advertised in advance of actual market penetration.

Some thought should be given to making use of the searchability of such a bibliography. At negligible cost, it can be re-sorted and listed in a wide variety of ways (author, date, publication source). For example, identifying all the citations available at one location should assist the scholar in deciding where to pursue his work. Perhaps there are ways to produce it which would help the librarian locate the material within the library or to pick out material in the same location before moving on.

|

| ABE Books |

3. Widen the availability of the text of primary sources. Much of this would be of interest to the non-professional reader if he could get to it more easily, and it would enlarge his sense of participating in the interpretation or "buy in". Unfortunately, most photocopying is still of poor quality, and the most useful version of the original is one re-typed on a keyboard. Therefore, I recommend searching for ways to induce the scholar to do it for you; if he really thinks it is an important document, would he please keyboard it for everyone else. If a way is provided for counting the number of "hits" on a document's citations, it will lead you to the popular documents, to begin with. Please don't try to start with "A" and end with "Z". After you have produced digitized copy, then photocopy it if you wish. The local Athenaeum makes quite a lot of revenue from selling reprints of architectural drawings, so there are exceptions.

4. Do not limit yourself to primary sources. There are copyright issues here, but a link to Amazon will get you revenue from Google, and a used copy of a book from ABEbooks will be delivered to your home by United Parcel Service. It's often cheaper than parking near a library.
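Two of the suggestions above, the re-sortable bibliography and the counting of "hits", are easy to picture in a few lines of Python. This is only a rough sketch under stated assumptions: the `Citation` fields, the function names, and the sample entries are all invented for illustration and describe no existing system.

```python
from collections import Counter, defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class Citation:
    """One bibliography entry; the field names are illustrative assumptions."""
    author: str
    year: int
    source: str    # publication source
    location: str  # library or archive holding the item


def resort(citations, key):
    """Return the citations ordered by one field, e.g. 'author' or 'year'."""
    return sorted(citations, key=lambda c: getattr(c, key))


def by_location(citations):
    """Group citations by where the scholar would have to go to read them."""
    groups = defaultdict(list)
    for citation in citations:
        groups[citation.location].append(citation)
    return dict(groups)


def most_requested(hit_log, top_n=3):
    """Count hits per document id and return the most popular ones first."""
    return Counter(hit_log).most_common(top_n)


# Invented sample data, not drawn from any real bibliography.
SAMPLE = [
    Citation("Smith", 1951, "Journal A", "Library One"),
    Citation("Adams", 1987, "Journal B", "Library Two"),
    Citation("Jones", 1902, "Journal A", "Library One"),
]
```

The hit log here is simply a list of document identifiers, one per request; the documents at the top of `most_requested` would be the ones worth keyboarding first.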

REFERENCES

| Thomas Jefferson: The Art of Power: Jon Meacham: ISBN: 978-1400067664 | Amazon |

Rearranging Mickles, Makes Muckles

|

| Right Angle |

Thank you, Mr. President. Tonight I'm to discuss Right Angle Club's odd contribution to book publishing, with emphasis on history books. To make it easier for the audience to follow, I originally titled the speech Three Threes, for three meanings, in three categories each. It touched -- in threes -- on 1) book technology, 2) variable proportions of facts and opinion, and 3) pitfalls to success. Times three, made nine bases to touch, so that evening at the Philadelphia Club I had to hustle. For a reading audience, however, it's more comfortable to revert to the writing style which I am advocating, since it illustrates as it explains.

|

| Philadelphia-Reflections |

First, let's deconstruct the essay you are reading. Ten years ago, I began taking notes at the Right Angle Club's Friday lunches, writing reviews of the speakers. The younger generation calls it "blogging" when you then broadcast such essays on the Internet; that's how I started the journey, giving it the title of Philadelphia Reflections, which is reached by entering www.Philadelphia-Reflections.com/topic/233.htm in the URL box of any search engine, on any browser. If you prefer, you can simply click on the underlined lettering in this blog, a process called "linking". The blogs in this website concentrate on the Philadelphia "Scene" because that's what our speakers usually do. Our club has met since 1922, so by rights, it might contain ten thousand blogs, but unfortunately, I arrived late, so we only have 3000. Call them 3000 beads in a bowl, subdivided into annual bowls of beads. After more accumulation, they were re-connected as Collections for ease of use in re-assembly. Sometimes they are chronological, sometimes by subject matter; the purpose is to expedite searching for things, placing them in temporary categories, ultimately producing a book, a speech, or an essay. When an archive grows to this size, it's surprising how easy it is to lose something you know you have written.

|

| The Right Angle Book |

After a while themes emerge, so if I picked similar beads from the combined bowl of four hundred, then groups of similar beads become necklaces. Just as green beads make a green necklace, blogs about Ben Franklin make up a Franklin topic. Selected but related necklaces can then be artfully arranged into a rope of necklaces, which is to say, a book about Philadelphia, from William Penn to Grace Kelly. Almost without realizing it, I had been writing quite a big book without foreseeing where it would go. I described this curiosity to my computer-savvy son, who wrote a computer program to make it simple for anyone to rearrange blogs and topics of aggregated material. Simple, that is, for an author to re-arrange his random thoughts repeatedly, as if to wander among his own random thoughts, and only in retrospect extract the books. Most authors are not Scotsmen, but they eventually learn that Many a Mickle -- Makes a Muckle. Many authors, however, once imagined they sat down to write a book from start to finish, only to discover one was already there, waiting to emerge if the Mickles could be re-arranged. At the end of the process, it is of course often necessary to insert a few bridging sentences to smooth out the lumps. However, when this outcome hasn't been planned, most authors must totally rewrite their material into a coherent book, once they finally realize where it has been going. Since I am also in the publishing business in a small way, I was able to guide my son into automating several subsequent steps of conventional publishing so any author could perform them without a publisher.
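The beads, necklaces, and rope can be pictured as three nested lists. The sketch below is not the program my son wrote; it is a minimal illustration, with class and function names (`Blog`, `Topic`, `Volume`, `move`) invented for the purpose, of how re-arranging Mickles might look in Python.

```python
from dataclasses import dataclass, field


@dataclass
class Blog:
    """A single bead: one short essay."""
    title: str
    text: str


@dataclass
class Topic:
    """A necklace: an ordered string of related blogs."""
    title: str
    blogs: list = field(default_factory=list)


@dataclass
class Volume:
    """A rope of necklaces: an ordered set of topics, i.e. a book."""
    title: str
    topics: list = field(default_factory=list)

    def manuscript(self):
        """Flatten the volume into one text, in its current arrangement."""
        parts = [self.title]
        for topic in self.topics:
            parts.append(topic.title)
            for blog in topic.blogs:
                parts.append(blog.title)
                parts.append(blog.text)
        return "\n\n".join(parts)


def move(items, old_index, new_index):
    """Re-arrange a bead within a necklace (or a necklace within a rope)."""
    items.insert(new_index, items.pop(old_index))
```

Because a `Topic` only holds references to its blogs, the same bead can sit on more than one necklace, and the book is whatever `manuscript()` produces after the last re-arrangement.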

That begins to make book authorship resemble fine art; the artist does it all except marketing. In marketing fine art, it is conventional for the artist to receive 50% of the retail price, whereas in book publishing an author only receives 10%, and must sell five books to achieve an equal result. With refinement, using this system of authorship promises to incentivize the writing of many superior books, in smaller individual numbers, but an overall greater reading audience. True, "file this program" also aspires to eliminate the whole old-fashioned assembly line of publishing, which is in the process of dying anyway, but without a useful replacement.

So here's the cluster of three components -- blogs re-assembled into topics, topics re-assembled into volumes, with copy editing, spell checking and book design mostly performed by the computer. Whether paper books will completely transform into e-books is not so certain, but will mainly depend on computer design, not book design. At the moment, e-books are making their greatest progress in fiction books, for technical reasons. No doubt their negligible production costs will promote the emergence of vanity publishing, works of transient interest, and otherwise unsalable books. For this to work, will require not merely a further computer revolution, but a revolution within information circles. I'm afraid I am too old to indulge in such distant visions and can only hope to advance it a stage or two.


More, or Fewer, Raisins in the Pudding

|

| manuscripts |

Some book publishers do indeed regard history as handfuls of paper in a manuscript package, mostly requiring rearrangement in order to be called a book. Librarians are more likely to see historical literature dividing into three layers of fact mingled with varying degrees of interpretation: starting with primary sources, which are documents allegedly describing pure facts. Scholars come into the library to pore over such documents and comment on them, usually to write scholarly books with commentary, called secondary sources. Unfortunately, many publishers reject anything which cannot be copyrighted or is otherwise unlikely to sell very well, however important historians say it may be. Authors of history who make a living on it tend to focus on the general reading public, generating tertiary sources, sometimes textbooks, sometimes "popularized" history in rising levels of distinction. Sometimes these authors go back to original sources, but much of their product is based on secondary sources, which are now much more reliable because of the influence of a German professor named Leopold von Ranke. Taken together, you have what are traditionally called the three levels of historical writings.

|

| Leopold von Ranke |

Leopold von Ranke formalized the system of documented (and footnoted) history about 1870, vastly improving the quality of history in circulation by insisting that nothing could be accepted as true unless based on primary documents. Von Ranke did in fact transform Nineteenth-century history from opinionated propaganda in which it had largely declined, into a renewed science. At its best, it aspired to return to Thucydides, with footnotes. That is, clear powerful writing, ultimately based on the observations of those who were actually present at the time. Unfortunately, Ranke also encased historians in a priesthood, worshipping piles of documents largely inaccessible to the public, often discouraging anyone without a Ph.D. from hazarding an opinion. The extra cost of printing twenty or thirty pages of bibliography per book is one which modern publishing can ill afford, making modern scholarship a heavier task for the average graduate student because the depth of scholarship tends to be measured by the number of citations, but incidentally "turning off" the public about history. All that seems quite unnecessary since primary source links could be provided independently (and to everyone) on the Internet at negligible cost. And supplied not merely to the scholar, but to any interested reader, no need to labor through citations to get at documents in a locked archive.

|

| E-Books |

The general history reader remains content with tertiary overviews, and a few brilliant secondary ones because document fragility bars public access to primary papers, but many might enjoy reading primary sources if they were physically more available. The general reader also needs impartial lists of "suggested reading", instead of the "garlands of bids", as one wit describes the bibliographies employed by scholars. If you glance through the annual reports of the Right Angle Club, you will see I have increasingly included separate internet links to both secondary as well as primary sources, because the bibliographies within the secondaries lead back to the primaries. Unfortunately, you must fire up a computer to access these treasures. The day soon approaches when scholars can carry a portable computer with two screens, one displaying the historian's commentary while the second screen displays related source documents. It seems likely history on paper will persist while that remains cheaper, but also because e-books make it hard to jump around. Newspapers and magazines particularly encounter this obstacle, because publication deadlines give them less time for artful re-arrangement. E-books are sweeping the field in books of fiction because fiction is linear. Non-fiction wanders around, even though this subtlety is often unappreciated by computer designers.

Prisoners in the Stone

|

| A Prisoner of the Stone |

The first revolution in book authorship has already taken place. Almost all manuscripts arrive on the publisher's desk as products of a home computer. In fact, many publishers refuse to accept manuscripts in any other form. Not only does this eliminate a significant typing cost to the publisher, but it allows him to experiment with type fonts and book design as part of the decision to agree to publish the book. What's more, anyone who remembers the heaps of paper strewn about a typical editor's office knows what an improvement it can be, just to conquer the trash piles. To switch sides to the author's point of view, composing a book on a home computer has greatly facilitated the constant need for small revisions. An experienced author eventually learns to condense and revise the wording in his mind while he is still working on the first draft. However, even a novice author is now able to pause and select a more precise verb, eliminate repetition, tighten the prose. He can go ahead and type in the sloppy prose and then immediately improve it, word by word. The effect of this is to leave less for the copy editor to do, and to increase the likelihood the book will be accepted by the publisher. The author is more readily able to see how the book looks than he would have been with mere typescript.

It must be confessed, however, that a second revolution caused by the computer has already come -- and gone. Not so long ago, I once linked primary documents already on the Internet to the appropriate commentary within my blog. The idea was to let the reader flip back and forth to the primary sources if he liked, and without interrupting the flow of my supposedly elegant commentary. In those early days of enthusiasm, volunteers were eager to post source material into the ether, just asking for someone to read it eagerly. I had linked up Philadelphia Reflections to nearly a thousand citations when an invincible flaw exposed itself. Historians were eager enough to post source documents, but not so eager to maintain them. One link after another was dropped by its author, producing a broken link for everyone else. The Internet tried to locate something which wasn't there, slowing the postings to a pitiful speed. Reluctantly, I went through my web site, removing broken links and removing most links. Maybe linking was a good idea, but it didn't work. There is thus no choice but to look to institutional repositories for historical storage, and funding to pay for maintaining availability for linkage. It is not feasible to free-load, although only recently it had seemed to be.

This pratfall assumed technology would provide more short-cuts than in fact it would. A more difficult obstacle emerges after some thought is given to the nature of writing history because it seems remarkably similar to Michelangelo's description of how to carve a statue. Asked how to carve, he said it was simple. "Just chip away the stone you don't want, and throw it away." Michelangelo saw statues as "prisoners of the stone" from which they were carved, while insights and generalizations of history emerge from a huge mass of unsorted primary documents. But there is a different edge to writing history. Uncomfortably often, the process of writing history is one of disregarding documents which fail to support a certain conclusion, often documents which send inconvenient messages to modern politics. Carried too far, de-selecting disagreeable documents amounts to attempting to destroy alternative viewpoints. By this view of it, what the author chooses to disregard is then as important as what he chooses to include. Unless awkward linkages are consciously maintained in some form, they will soon enough disappear by themselves. It is a great fallacy to assume that ancient history can be isolated from current politics, or even to believe that history teaches the present. Often, it is just the other way around.

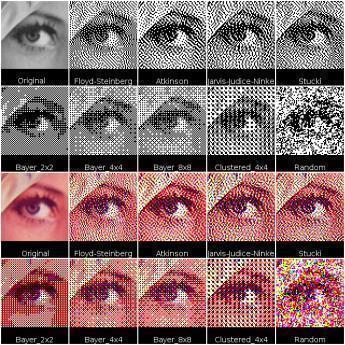

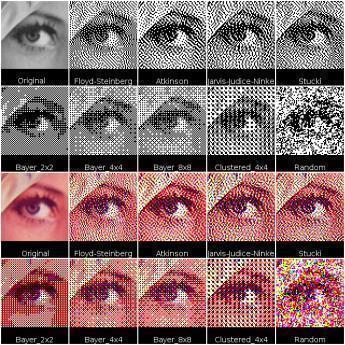

Dithering History

Dithering is originally a photographic term, referring to the process of smoothing out rough parts of an excessively enlarged photo. Carried over to the profession of writing history, the term alludes to smoothing over the rough parts of a narrative with a little unacknowledged conjecturing.

In photography, when a picture is enlarged too far, it breaks apart into bits and pieces. Dithering fills in the blank gaps between "pixels" actually recorded by the camera. It amounts to guessing what a blank space should look like, based on what surrounds its edges. In the popular comic strip, Blondie's husband Dagwood works for an explosive boss called Mr. Dithers, who "dithers" between outbursts, when he vents his frustration. But that's to dither in the fifteenth-century non-technical sense, meaning to hesitate in an aimless trembling way. If the cartoonist who draws Blondie will forgive me, I have just dithered in the sense I am discussing in history. I haven't the faintest idea what the cartoonist intended by giving his character the name of Mr. Dithers, so I invented a plausible theory out of what I do know. That's what I mean by a third new meaning for dither, in the sense of "plausible but wholly invented". It's fairly common practice, and it is one of the things which Leopold von Ranke's insistence on historical documentation has greatly reduced.

To digress briefly about photographic dithering, the undithered product is of degraded quality, to begin with. Dithering systematically removes the jagged digital edge, and restores the original analog signal. In that case, dithering the result brings it back toward the original picture. That's not cheating, it's the removal of a flaw.

Dithering of history comes closer to resembling a different photographic process, which samples the good neighboring pixels on all sides of a hole and synthesizes an average of them to cover the hole. It works best with four-color graphics, converting them to 256-color approximations. But the key to all photographic approaches is to apply the same formula to all pixel holes. That's something a computer can do but a historian can't, and historical touch-ups seem less legitimate because the reader can't recognize when it has happened, and can't reverse it by adding or removing a filter. Dithering may occasionally improve the readability of the history product, usually at the expense of accuracy, sometimes at great expense. It's conventional among more conscientious writers to signal what has happened by writing, "As we can readily imagine Aaron Burr saying to Alexander Hamilton just before he shot him." That's less misleading than just saying "Burr shouted at Hamilton, just before he blew his brains out." The latter sends no signal or footnote to the reader, except perhaps to one who knows that Hamilton was shot in the pelvis, not the head. In small doses, dithering may harmlessly smooth out a narrative. But there's a better approach: to write history in short blogs of what is provable, later assembling the blogs like beads in a necklace. Bridges may well need to be added to smooth out the lumps, but that becomes a late editorial step, consciously applied with care. And consequently, author commentary is more likely to be recognized as commentary, rather than rejected as fiction.
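For readers who want to see the photographic hole-filling described above in concrete terms, here is a minimal sketch in Python. It is strictly a neighbor-averaging fill (image people would call it interpolation rather than dithering proper), and the grid representation, the use of `None` for a missing pixel, and the function name `fill_holes` are all assumptions made for illustration, not anything taken from a real photo program.

```python
from typing import List, Optional

Grid = List[List[Optional[float]]]


def fill_holes(grid: Grid) -> Grid:
    """Return a copy of grid with each hole (None) replaced by the average
    of its known neighbors. The same formula is applied to every hole."""
    rows = len(grid)
    cols = len(grid[0]) if rows else 0
    filled = [row[:] for row in grid]  # copy, so the original stays untouched
    for r in range(rows):
        for c in range(cols):
            if grid[r][c] is not None:
                continue  # a pixel the camera actually recorded
            # Collect recorded values from the (up to) eight surrounding cells.
            neighbors = [
                grid[rr][cc]
                for rr in range(max(0, r - 1), min(rows, r + 2))
                for cc in range(max(0, c - 1), min(cols, c + 2))
                if (rr, cc) != (r, c) and grid[rr][cc] is not None
            ]
            if neighbors:
                filled[r][c] = sum(neighbors) / len(neighbors)
            # With no recorded neighbors there is nothing to guess from,
            # so the hole is left as a hole.
    return filled


picture = [
    [10.0, 20.0, 30.0],
    [40.0, None, 60.0],
    [70.0, 80.0, 90.0],
]
patched = fill_holes(picture)
```

Because the original grid is left untouched, the guess can always be compared with what was actually recorded, which is exactly the safeguard the reader of a dithered history does not have.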

Dithering the holes is often just padding. It would be better to spend the time doing more research.

Ruminations About the Children's Education Fund (3)

The Right Angle Club runs a weekly lottery, giving the profits to the Children's Educational Fund. The CEF awards scholarships by lottery to poor kids in the City schools. That's quite counter-intuitive because ordinarily most scholarships are given to the best students among the financially needy. Or to the neediest among the top applicants. Either way, the best students are selected; this one does it by lottery among poor kids. The director of the project visits the Right Angle Club every year or so, to tell us how things are working out. This is what we learned, this year.

The usual system of giving scholarships to the best students has been criticized as social Darwinism, skimming off the cream of the crop and forcing the teachers of the rest to confront a selected group of problem children. According to this theory, good schools get better results because they start with brighter kids. Carried to the extreme, this view of things leads to maintaining that the kids who can get into Harvard, are exactly the ones who don't need Harvard in the first place. Indeed, several recent teen-age billionaires in the computer software industry, who voluntarily dropped out of Harvard seem to illustrate this contention. Since Benjamin Franklin never went past the second grade in school perhaps he, too, somehow illustrates the uselessness of education for gifted children. Bright kids don't need good schools or some such conclusion. Since dumb ones can't make any use of good schools, perhaps we just need cheaper ones. Or some such convoluted reasoning, leading to preposterous conclusions. Giving scholarships by lottery, therefore, ought to contribute something to educational discussions and this, our favorite lottery, has been around long enough for tentative conclusions.

Just what improving schools means in practical terms does not yet emerge from the experience. Some could say we ought to fire the worst teachers, others could say we ought to raise salary levels to attract better ones. Most people would agree there is some level of mixture between good students and bad ones at which the culture mix becomes harmful rather than helpful overall; whether just one obstreperous bully is enough to disrupt a whole class, or whether something like 25% of well-disciplined students would be enough to restore order in the classroom, has not been quantitatively tested. What seems indisputable is that the kids and their parents do accurately recognize something desirable to be present in certain schools but not others; their choice is wiser than the non-choice imposed by assigning students to neighborhood schools. Maybe it's better teachers, but that has not been proved.

It seems a pity not to learn everything we can from a large, random experiment such as this. No doubt every charity has a struggle just with its main mission, without adding new tasks not originally contemplated. However, it would seem inevitable for the data to show differences in success among types of schools, and among types of students. Combining these two varieties in large enough quantity, ought to show that certain types of schools bring out superior results in certain types of students. Providing the families of students with specific information then ought to result in still greater improvement in the selection of schools by the students. No doubt the student gossip channels already take some informal advantage of such observations. Providing school administrations with such information also ought to provoke conscious improvements in the schools, leading to a virtuous circle. Done clumsily, revised standards for teachers could lead to strikes by the teacher unions. Significant progress cannot be made without the cooperation of the schools, and encouragement of public opinion. After all, one thing we really learned is that offering a wider choice of schools to student applicants leads to better outcomes. What we have yet to learn, is how far you can go with this idea. But for heaven's sake, let's hurry and find out.

History Writing and History Teaching

The conventional pattern of testing history students sounds a lot like the pattern of writing history. Daily or weekly quizzes, followed by tests at the end of subject material, followed in turn by final examinations of a whole course; it all sounds like blogs for events, chapters for topics, and books for an overall subject, as we have earlier proposed as the pattern for publishing history. It would probably be an endless argument as to whether teaching is patterned after authorship or the other way around since nowadays most books of history are written by professors who are paid to teach. Furthermore, every lecturer quickly learns how organizing a lecture and responding to questions will sharpen the insights of authorship. Those who pursue two trades simultaneously will usually adopt similar patterns for both, regardless of which is the primary source of income.

There is one difference, however. Ever since von Ranke made footnotes and primary source material the essential hallmarks of historical scholarship, academic history has acquired a certain rigor which talking to an audience of late teenagers does not encourage, may even discourage. Professors with long hair and blue jeans are attempting to merge with the audience, and those who promote them are wise to withhold tenure until it is established that standards are being met, particularly in grade inflation. There is even an undercurrent to the repeated complaint that publication is being overvalued by comparison with teaching skill. In an unmonitored classroom, it can be difficult to know whether presentations are politically neutral, fact-based and restrained. Publications and citations can at least be judged by those standards. But that may be the least of it.

| Thucydides and Machiavelli |

History to a large extent is based on secondary and tertiary sources, and what the student retains is a summation of filtered interpretations. From what the students learn, the culture learns, possibly filtered again by graduates who have become columnists and anchormen. Professors are a vital link in society's chain of opinion formation, eventually reaching the politician who raises his hand to vote in a legislative body. Whether that politician is responding to the myth of George Washington and the cherry tree or to the generalizations of Thucydides and Machiavelli -- makes a difference, even though George Washington makes a better role model than Machiavelli. The point is that academic lectures cannot easily employ footnotes to primary sources, as secondary sources regularly are expected to do. And they are only one example of several sources of community opinion which could profit from lessening the doubts of their critics.

REFERENCES

Political Theory: Classic and Contemporary Readings, Vol. 1: Thucydides to Machiavelli. Joseph Losco and Leonard A. Williams (Editors). ISBN 978-1891487910.

New Looks for College?

| Kevin Carey |

The New York Times ran an article by Kevin Carey on March 8, 2015, predicting changes ahead for colleges so big as to bring an end to college as we know it. A flurry of reader responses followed on March 15, making different predictions. Since almost none of them mentioned the changes I would predict, I now offer my opinion.

| Cambridge University |

Colleges have responded to their current popularity, mostly by building student housing and entertainment upgrades, presumably to attract even more students. What I am seeing seems to be a way of taking advantage of current low-interest rates with the type of construction which can hope for conventional mortgages or even sales protection, in the event of a future economic slump. In addition, they are admitting many more students from foreign countries, probably hoping not to lower their standards for domestic admissions. They probably hope to establish a following in the upper class of these countries, eventually enabling them to maintain expanded enrollments by lowering standards for a worldwide audience of students, rather than merely a domestic one. With luck, that might lead to an image of superiority for American colleges, even after the foreign nations eventually build up their standards. The example would be that of Ivy League colleges sending future Texas millionaires back to Texas, which now maintains an aura of superiority for Ivy League colleges, well after the time when competing Texas colleges are themselves well-funded. The Ivy League may even be aware of the time when the Labor Party was in power in England, and for populist reasons deliberately underfunded Oxford and Cambridge. American students kept arriving anyway, seeking prestige rather than scholarship.

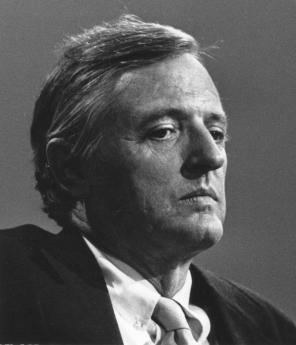

| William F. Buckley Jr |

Television courses seem to be a different phenomenon. A good course is a hard course, so a superior television course will prove to be even harder. In fact, it might be said the main purpose of college is to teach students how to study; the graduates of first-rate private schools find college to be rather easy, providing them with extra time for extra-curricular activities which are not invariably trivial. I well remember William F. Buckley Jr, pouring out amazing amounts of written prose for the college newspaper and other outlets, in spite of carrying a rigorous academic workload. I feel sure he did not acquire that talent in college, but rather, came to Yale, already loaded for Bear. I am certain I do not know what future place tape-recorded classes will eventually assume, but I do feel such courses would be most useful for graduate students, who have already learned how to study in solitude.

To return to the excess of dormitories under construction, the approaching surplus of them might also lead to a better use, which is for faculty housing. An eviction of students from dormitories would lead to urban universities beginning to resemble London's Inns of Court in physical appearance, with commuting day-students, mostly attending from nearby. The day is past, although the students do not believe it, when there was very much difference between living in Boston and living in California, and the much-touted virtue of seeing a new environment will eventually lose its charm. It may all depend on how severely an economic decline retards the traditional pressure to escape parental control, but it is possible to foresee at least one improvement which could result from fiscal stringency.

Millennials: The New Romantics?

| Romantic Era |

It was taught to me as a compliant teenager that the Enlightenment period (Ben Franklin, Voltaire, etc.) was followed by the Romantic period of, say, Shelley and Byron. Somehow, the idea was also conveyed that Romantic was better. Curiously, it took a luxury cruise on the Mediterranean to make me question the whole thing.

It has become the custom for college alumni groups to organize vacation tours of various sorts, with a professor from Old Siwash as the entertainment. In time, two or three colleges got together to share expenses and fill up vacancies, and the joint entertainment was enhanced with the concept of "Our professor is a better lecturer than your professor", which is a light-hearted variation of gladiator duels, analogous to putting two lions in a den of Daniels. In the case I am describing, the Harvard professor was talking about the Romantic era as we sailed past the trysting grounds of Chopin and George Sand. Accompanied by unlimited free cocktails, the scene seemed very pleasant, indeed.

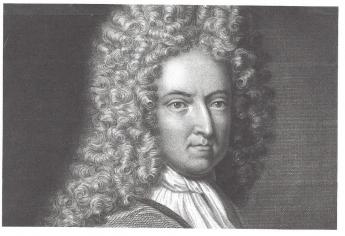

| Daniel Defoe |

In the seventy years since I last attended a lecture on such a serious subject, it appears the driving force behind Romanticism is no longer Rousseau, but Daniel Defoe. Robinson Crusoe on the desert island is the role model. Unfortunately for the argument, a quick look at Google assures me Defoe lived from about 1660 to 1731, was a spy among other things, and wrote the book which was to help define the modern novel, for religious reasons. His personal history is not terribly attractive, involving debt and questionable business practices, and his prolific writings were sometimes on both sides of an issue. He is said to have died while hiding from creditors. Although his real-life model Alexander Selkirk only spent four years on the island, Defoe has Crusoe totally alone on the island for more than twenty years before the fateful day when he discovers Friday's footprint in the sand.

| Robinson Crusoe |

But the main point of history was that Defoe was born well before William Penn founded Pennsylvania and died before George Washington was born. The romanticism he did much to promote was created at least as early as the beginning of the Enlightenment and certainly could not have been a retrospective reaction to it. Making allowance for the slow communication of that time, it seems much more plausible to say the Enlightenment and the Romantic Periods were simultaneous reactions to the same scientific upheavals of the time. Some people like Franklin embraced the discoveries of science, and other people were baffled to find their belief systems challenged by science. While some romantics, like the author of Campbell's Gertrude of Wyoming, whose heroine is depicted as lying on the ocean beaches of Pennsylvania watching the flamingos fly overhead, were merely ignorant, the majority seemed to react to the scientific revolution as too baffling to argue with. Their reasoning behind clinging to challenged premises was of the nature of claiming unsullied purity. Avoidance of the incomprehensible reasonings of science leads to the "noble savage" idea, where the untutored innocent, young and unlearned, is justified in contesting the credentialed scientist as an equal.

Does that sound like a millennial to anyone else?

"Sir"

In 1938 when I was 14 years old, I entered a new virtual country with its own virtual language. That is, I went to an all-male boarding school during the deepest part of the worst depression the country ever had.

| Boarding School |

While it should be noted I had a scholarship, there is little doubt I was anxious to learn and emulate the customs of the world I had entered. My life-long characteristic of rebellion was born here, but at first, it evoked a futile attempt to imitate. Not to challenge, but to adopt what I could afford to adopt. The afford part was a real one because the advance instructions for new boys announced a jacket and tie were required at all meals and classes, and a dark blue suit with a white shirt for Sunday chapel. That's exactly what I arrived with, and let me tell you my green suit and brown tie were pretty well worn out by the first Christmas when I came home on the train for ten days' vacation, the first opportunity to demand new clothes. First-year students were identified by requiring a black cap outdoors, and never, ever, walking on the grass. The penalty for not obeying the "rhine" rules was to carry a brick around, and if discovered without a brick, to carry two bricks. But that's not what I am centered on, right now. The thing which really bothered me was unwritten, equally peer-pressured by my fellow students: the custom of addressing all my teachers as Sir. The other rules only applied until the first Christmas vacation, but the unwritten Sir rule proved to be life-long.

| Sir |

And it was complicated. It was Sir, as an introduction to a question, not SIR!, as a sign of disagreement. You were to use this as an introduction to a request for teaching, not as any sort of rebuke or resistance. Present-day students will be interested to know that every one of my teachers was a man; my recollection is, except for the Headmaster's secretary, the Nurse was the only other female employee. The average class size was seven. Seven boys and a master. Each session of classes was preceded by an hour of homework, the assignment for which was posted outside a classroom containing a large oval maple table. Needless to say, the masters all wore a jacket and tie, most of the finest style and workmanship. They always knew your name, and always called on every student for answers, every day. Masters relaxed a little bit during the two daily hours of required exercise, when they took off their ties and became the coaches, but were just as formal the following day in class. I had been at the head of the class of what Time Magazine called the finest public high school in America, but I nearly flunked out of the first semester in this boarding school. It was much tougher at this private school than I felt any school had a right to be, but they really meant it. Over and over, the Headmaster in the pulpit intoned, "Of those to whom much is given, much is expected."

I had some new-boy fumbles. Arriving a day early, I found myself with only a giant and a dwarf for company at the dining table. I assumed the giant was a teacher, but he was a star on the varsity football team. And I assumed the dwarf was a student, but he was assistant housemaster. One was to become a buddy, the other a disciplinarian, but I had them reversed, calling the student "Sir", but the master by his first name. Bad mistake, which I have been reminded of at numerous reunions since then.

| Yale |

When I later got to Yale, I began to see the rules behind the "Sir" rule. In the first place, all of the boarding school graduates used it, and none of the public school graduates, although many of the public school alumni began, falteringly, to imitate it. Without my realizing it, a three-year habit had turned out to be a way of announcing a boarding school education. The effect on the professors was interesting; they rather liked it, so it was reinforced. It had another significance, that the graduates who said "Sir" acquired upper-class practices, while the red-brick fellows seldom did. The only time I can remember its being scorned was eight years later, by a Viennese medical professor with a thick accent, and he was obviously puzzled by the significance. Hereditary aristocracy, perhaps. Indeed, I remember clearly the first time I was addressed as Sir. I was an unpaid hospital intern, but the medical students of one of the hospital's two medical schools flattered me with the term. In retrospect, I can see it was a way of announcing that graduates of their medical school knew what it meant, while the other medical school was just red-brick. Although the latter had mostly graduated from red-brick colleges, their medical school aspired to be Ivy League.

If you traveled in Ivy League circles, the Sir convention was pretty universal until 1965, when going to school tieless reached almost all college faculties, thus extending permission to students to imitate them. Perhaps this had to do with co-education, since the sir tradition was never very strong in women's colleges, and denounced by the girls when the men's colleges went co-ed. Perhaps it had to do with the SAT test replacing school background as the major selection factor for admission. Perhaps it was the influx of central European students, children of European graduates for whom an anti-aristocratic posture was traditional, and until they came to America, largely futile. Perhaps it was economic. The American balance of trade had been positive for many decades before 1965; afterward, the balance of trade has been steadily negative.

In Shakespeare's day, "Sirrah" was a slur about persons of inferior status. In Boswell's eighteenth Century day, his Life of Johnson immortalized his characteristic put-down with a one-liner. It survives today as a virtually text-book description of how to dominate an argument at a boardroom dispute. "Why, Sir," was and remains a signal that you, you ninny, are about to be defeated with a quip. It's a curious revival of a new way of immortalizing small-group domination, and a very effective one at that, which even the soft-spoken Quakers use effectively. Whatever, whatever.

The 90-plus years of tradition of addressing your professor as "Sir," is gone, probably for good, except among those for whom it is a deeply ingrained habit. Along with the tradition of female high school teachers, followed I suppose by male college professors.

13 Blogs

Nanoparticles: The Dwarfs are Coming

A lot of basic science will have to be revised when we fully understand what happens to particles after they get small enough.

Evo-Devo

The Franklin Institute has been making awards for scientific achievement for 188 years, long before the Nobel Prize was invented. The seats are all reserved before the meeting notices are even mailed.

Wistar Institute, Spelled With an "A"

The Wistar Institute is properly pronounced "Wister", but in fact it's all the same family. Its fame in biomedical research makes that quite irrelevant.

Revenue Stream for Historical Documents

Rearranging Mickles, Makes Muckles

Many a mickle, makes a muckle. Re-arranging the mickles makes better buckles.

More, or Fewer, Raisins in the Pudding

The primary sources of history are supposed to be factual. Tertiary history is mostly an interpretation of many primary bits.

Prisoners in the Stone

History emerges from a welter of facts, with the irrelevant and inconvenient material selectively removed.

Dithering History

It's better to start writing history with what you know for certain. And it's usually better to stop there, too.

Ruminations About the Children's Education Fund (3)

By selecting children for scholarships by lottery, it emerges that different schools make big differences.

History Writing and History Teaching

Teaching history is remarkably similar to writing history, at least in its pattern of construction. But there is one exception.

New Looks for College?

Many people predict big changes are in store for Universities. Here's my take on it.

Millennials: The New Romantics?

The romantic period of literature is said to have followed the Enlightenment. Maybe they were just different people at the same time.
