
Thinking About Thought

There is a yawning gap between concepts of the mind and concepts of brain function.

Civilization advances in many ways at the same time. For thousands of years, it has seemed impossible to unify what we know about the mind with what we know about brain function, so we don't really attempt it. We also put to work what we know about culture, independently of what we know about psychiatry, psychology, brain physiology, neurology, mathematics, intelligence, and several dozen categories of scholarship. However desirable it might be to unify these aspects of human brain function, our understanding is unequal to the task; we specialize, waiting for unifying developments to emerge.

Recently, I enjoyed the experience of being the oldest tuition-paying student in a Yale University venture into what they mysteriously call Directed Studies, a mixture of history, philosophy, and literature. In this case, these coordinated searchlights simultaneously lit up the culture of ancient Greece. Just why these three academic disciplines were selected, and others like mathematics, neurophysiology, and psychiatry omitted, was not discussed. The unlikeliness of a productive seminar emerging from a group of psychiatrists intermixed with computer scientists and neurophysiologists seems clear enough. Perhaps the reason for selecting history, philosophy, and letters is no more complicated than making a simple start with three components of the Humanities, the department that awards a B.A. degree. At least that grouping might reduce the usual furor about boundaries, which is normally banished to the Curriculum Committee.

In any event, it was evident from the first moment that the students of this particular seminar for grown-ups were self-selected lifetime students of whatever subject comes up. Their nostalgia was for the good old days of overwhelming homework assignments and competition for the teacher's attention. Forever obedient to the laws of studenthood, they were not likely to challenge the rules of today's game. Its unspoken premises seemed to be: that a nation's great literature often conveys its kernel of passion better than its history does; that its history nevertheless discusses the nation's great triumphs and failures, and thus disciplines the inevitable bravado of its literature; and finally, that a culture's philosophy exposes the limits of its self-understanding, which is what ultimately defines the outer limits of its aspirations, perhaps even its achievements.

Professor Kagan, who seems to have originated this academic hybrid, may well give a different description of it. The following fugitive leaves display my own observations, which in eighty-six years full of experience have frequently fallen short of perfection.

George Ross Fisher MD July, 2011

Methods of Thought: Scientific, Logical, Future

|

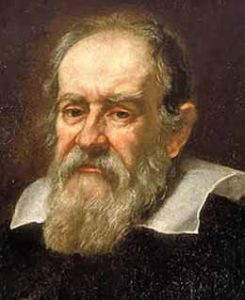

| Galileo |

Early in the Seventeenth century -- some say it was Galileo who started it -- the Scientific Method appeared. A hypothesis was generated, then subjected to experiment. Flaws were detected, the hypothesis was modified, then tested again. The central characteristic of truth was established: reproducibility. Under the Scientific Method, the same test should always yield the same result.

A few decades later someone -- probably Sir Francis Bacon -- applied a modified Scientific Method to the common law. What seemed like a good remedy for a dispute was tested in the courts by entertaining appeals of similar cases, to see how the remedy turned out. The law could not rely on systematic experiments, so it had to rely on nature's experiments. Of the two disciplines, science, with its cycle of thesis, hypothesis, and new thesis, came to rest on firmer conclusions sooner than the common law did, because scientists could devise systematic tests. A Judge, on the other hand, must wait for roughly the same circumstances to come up again before reproducibility can be determined, and that sometimes takes decades. Since a Judge can scarcely be expected to arrange his cases experimentally, non-comparable circumstances are always potentially present.

In both experimental science and the Law, however, increased velocity -- progress -- is an independent variable. Progress was celebrated enough during the Enlightenment to win public acceptance not merely of the validity of the Scientific Method, but of science itself and the Law itself. During the three hundred years after Galileo dropped bullets of differing sizes from the Tower of Pisa, rapid progress created a new respect for scientists and lawyers. Naturally, that led to lessened prestige for priests and kings, hence disruptions of existing power arrangements. History was the score-keeper of these turmoils, but History itself also became a variant of the scientific method. Unfortunately, even more than the Law, History had to wait long periods for natural experiments to present themselves. Unfortunately, also, the effect of history on politics created an incentive for misrepresentation, now one of its main weaknesses.

Meanwhile, untested Philosophy languished. The human uterus was not bicornuate, whatever the ancient authorities had taught, as anyone could see by examining one. Among logical philosophers, understanding crept in its petty pace; a century was brief in their vocabulary. For their part, Religions made a poor showing compared with the Common Law, particularly when advocating patterns of behavior. Religions were too rigid, too mysterious in their conclusions, provided too little guidance and too much grief. Too often the object seemed to be skill in making the worse appear the better reason, or mastery of the detestable techniques of Oxford debating societies, where slyly framed arguments win undeserved victories. Logic acquired a bad name when its practitioners could easily be seen to prefer victory for the debater over advances for truth. And yet it does seem much too soon to discard logic and debate; indeed, they may be on the threshold of their finest hour.

A professor of mathematics at one of America's greatest scientific graduate schools recently expressed his confusion about the nature of logic. Watching platoons of math graduate students march past his blackboard, he commented on the extraordinary diligence of mostly foreign-born students, perfectly content to spend twenty hours a day studying to achieve distinction. "And yet," he said, "every year there are two or three who never come to class, except to take the test. And they always get the highest possible grades." His inability to discuss the inner workings of such minds was related to the fact that he seldom met them in person. That such logical prodigies are not rare is also seen at chess tournaments, where players of no particular professional distinction can be observed moving among the chessboards of twenty opponents, occasionally even doing it blindfolded. The rest of us cannot hope to match such performances, so we are not entitled to scoff at the achievement, or belittle its potential for re-revolutionizing the science of thought.

Bill Gates

Academia sometimes expresses, and should express more often, its discomfort that Bill Gates and a number of other certifiable geniuses have found colleges disagreeable and dropped out. When one of them becomes the richest man in the world before he is forty, it at least becomes necessary to acknowledge that he is not a one-trick pony. These people seem to be differentially attracted to computer science, perhaps instinctively recognizing that machines seem capable of exceeding the largest human memory, and that their integrated processors sit idly waiting for some genius to put them to work. Even today, with mere mortals writing the programs, we are informed that seventy percent of stock market transactions are conducted between two machines, operating unattended. Perfecting stock transactions may well be approaching its useful limits, but the potential for improving the world's daily activities is so vast that grammar school children could easily list a hundred possibilities. What children cannot do is devise something to motivate a man to remain productive after he already has fifty billion personal dollars. Another thing children cannot do is describe an educational system appropriate for such extraordinary people, when they presently find nothing in the classroom worth their attention. We are giving up the Space Program as not worth its cost. Well, here's a goal that is obviously worth its cost, if only to stay ahead of international competitors in an atomic age.

Rise and Fall of Books

The Library Company of Philadelphia

John C. Van Horne, the current director of the Library Company of Philadelphia, recently told the Right Angle Club the history of his institution. It was an interesting description of an important evolution from Ben Franklin's original idea to what it is today: a non-circulating research library with a focus on 18th and 19th Century books, particularly those dealing with the founding of the nation and African American studies. Some of Mr. Van Horne's most interesting remarks were incidental to a rather offhand analysis of the rise and decline of books. One suspects he has been thinking about this topic so long it creeps into almost anything else he says.

Join or Die snake

Franklin devised the idea of having fifty of his friends subscribe to a pool of money to purchase, originally, 375 books, which they shared. The members were mainly artisans, and the books were heavily concentrated in practical matters of use in their trades. In time, annual contributions were solicited for new acquisitions, and the public was invited to share the library. At present, a membership costs $200, and annual dues are $50. Somewhere along the line, someone took the famous cartoon of the snake cut into eight pieces and applied its motto to membership solicitations: "Join or die." For sixteen years, the Library Company was the Library of Congress, but it was also a museum of odd artifacts donated by the townsfolk, as well as the workplace where Franklin conducted his famous experiments on electricity. Having moved from the second floor of Carpenters' Hall to its own building on 5th Street, it next made an unfortunate move to South Broad Street after James and Phoebe Rush donated the Ridgway Library. That building was particularly handsome, but bad guesses about the future demographics of South Philadelphia left it stranded until modified operations finally moved to the present location on Locust Street west of 13th. More recently, it also acquired the old Cassatt mansion next door, using it to house visiting scholars in residence and sharing some activities with the Historical Society of Pennsylvania on its eastern side.

Old Pictures of the Library Company of Philadelphia

The notion of the Library Company as the oldest library in the country tends to generate reflections about the rise of libraries, of books, and of publications in general. Prior to 1800, only a scattering of pamphlets and books was printed in America, or in the world for that matter, compared with the huge flowering of books, libraries, and authorship that was to characterize the 19th Century. Education and literacy spread, encouraged by the Industrial Revolution applying its transformative power to the industry of publishing. All of this lasted about a hundred and fifty years, and we can now see publishing in severe decline with an uncertain future. It's true that millions of books are still printed, and hundreds of thousands of authors are making some sort of living. But profitability is sharply declining, and competing media are flourishing. Books will persist for quite a while, but it is obvious that unknowable upheavals are coming. The future role of libraries is particularly questionable.

Rather than speculate about the internet and electronic media, it may be helpful to regard industries as having a normal life span which cannot be indefinitely extended by rescue efforts. No purpose would be served by hastening the decline of publishing, but things may work out better if we ask ourselves how we should best predict and accommodate its impending creative transformation.

www.Philadelphia-Reflections.com/blog/1470.htm

Many Mickles in One Muckle

|

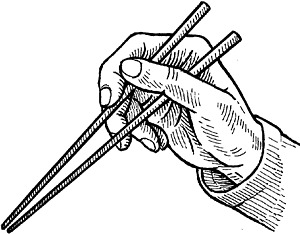

| Chopsticks |

As soon as computer processing was concentrated into one processor chip, it was apparent that faster chips ran hotter than slow ones. Processor chips were therefore cooled with fans, even placed in refrigerated jackets. Eventually, practical limits were reached, and a single chip could go no faster. Solution: add more processing chips.

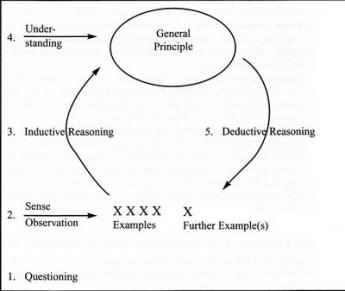

The human equivalent is called specialization. So far as we presently know, the processing power of the human brain is not limited by heat generation, but it does seem limited by something. Consequently, communal projects developed the pattern of isolating single functions assigned to specialists, then reconstructing the products of specialists into a completed pattern. A Philadelphia Quaker named Frederick Winslow Taylor developed this process to a high art. John D. Rockefeller then reduced it to an epigram: "Good leadership consists of showing ordinary people how to accomplish the work of superior people."

Frederick Winslow Taylor

As long as human brain architecture remains a rough guide to computer architecture, the deconstruction-reconstruction process retains the feature of enabling machines to take up where human guidance reaches its limit. Machine-organized thought will probably eventually leap ahead by whole orders of magnitude without human guidance, but until then the fortuitous limit of what one processor can do roughly matches the capacity limit of its human operator. That is, we are forced by other circumstances to organize computer programming on the assumption that most tasks could exceed the capacity of a single processor, so a supplementary hand-off must be provided for in every instruction set.
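The splitting, hand-off, and reassembly described in the paragraph above can be shown in miniature. What follows is a minimal Python sketch, assuming a task that divides cleanly (counting the words in a text); the function names are hypothetical, chosen only for illustration, and the code relies on the standard library's `concurrent.futures` module. Threads keep the example simple; for CPU-heavy work in CPython, `ProcessPoolExecutor` is the usual choice when genuine parallelism is wanted.

```python
from concurrent.futures import ThreadPoolExecutor


def count_words(chunk: str) -> int:
    """One 'specialist' job (hypothetical): count the words in a single chunk."""
    return len(chunk.split())


def split_work(text: str, parts: int) -> list[str]:
    """Deconstruct: divide the lines of a text into at most `parts` chunks."""
    lines = text.splitlines()
    size = max(1, -(-len(lines) // parts))  # ceiling division, at least one line per chunk
    return ["\n".join(lines[i:i + size]) for i in range(0, len(lines), size)]


def parallel_word_count(text: str, workers: int = 4) -> int:
    """Hand each chunk to a worker, then reconstruct the total from the partial results."""
    chunks = split_work(text, workers)
    with ThreadPoolExecutor(max_workers=workers) as pool:
        partial_counts = list(pool.map(count_words, chunks))
    return sum(partial_counts)  # Reconstruct: combine the specialists' products


if __name__ == "__main__":
    sample = "Many mickles\nmake a muckle\nas the old saying goes"
    print(parallel_word_count(sample, workers=2))  # prints 10
```

The text is split only on line boundaries so that no word is cut in half between two workers; that choice is the kind of hand-off rule any divided task must settle before the pieces can be put back together.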

We have now reached the point where an inexpensive pocket computer can affordably contain much more memory than the human brain. It is common to see office computers with two or four processor chips. It will not be long before computer engineers provide us with both memory and processing power a thousand times greater than the human brain's, and for a while, we will not know what to do with it.

But then we enter the really modern age: the time when computer design can no longer depend on the fortuitous processes of the human mind for a useful pattern to follow.

Socratic Teaching

It's been sixty-three years since I last sat in a classroom as a student. For an additional fifty-five years, I was a teacher; in the absence of any correspondence to the contrary, I believe I am still listed on the faculty of two medical schools. Socratic teaching is now nearly universal, but I only got a smattering of it as a student. I hated it then, and I still grumble about it. Just about the only thing I remember about my experience in fourth grade is the following question by the teacher: "Argentina grows wheat. Now, class, what does Argentina grow?" I refused to answer such a question.

It would be another ten years before I had a chance to read some Socratic dialogues, finally recognizing that many of the questions Socrates asked of his students were sarcastic, and even a few of his answers were mocking. After all, Socrates was judged a rebel and executed for promoting subversive thoughts among young and therefore impressionable students. The dialogues are not demonstrations of how to teach a class; they are semi-theatrical depictions of Plato's recollections of Socrates, heavily edited for the purpose of defending his reputation. The main modern criticism of Socrates is not that he made students squirm, but that he relied so exclusively on logic and reasoning. The truth of his teaching was based on plausibility, not testing and proof. Such pontification today would be viewed with skepticism; at best, it would be described as relying too heavily upon secondary sources, because there could certainly be no reference to primary ones. How would I know what Argentina grows? Or, for that matter, how do you know? One really has to pity that poor tormented teacher.

|

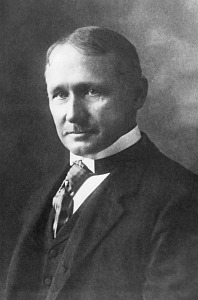

| Method |

One has less sympathy with the current medical school faculties of New York City, who have largely taken up a denatured version of what they imagine was the Socratic Method, which their students openly call teaching by coercion. One swaggering professor ended his dialogue with one of my classmates, who in fact later won the Nobel Prize in Medicine, with the finale, "You won't forget that, will you?" To which the future Nobel laureate replied, "Sir, I won't forget you." Another, somewhat less distinguished, classmate was cringing beside the locked door of the examination room, studying notes right up to the last moment before a final exam. "Well, Levy," boomed the same professor, "do you know everything about Rheumatic Fever?" To which was made the immortal response, "Sir, no one knows everything about rheumatic fever." By the time they get to be seniors, medical students are famously independent-minded, and they can give back as good as they get. So, one pities that professor less than that fourth-grade teacher, but questions the methodology more. One other mocking description the irreverent medical students give of it is: "Well, what am I thinking today?"

Let's change the scene to cable television, to a continuous live presentation of current history called C-SPAN. Once a week, some famous teacher from anywhere in the country is shown giving a real college seminar. One can easily imagine the editor of this program, with an essentially unlimited budget, seeking out the best teachers in the best educational system in the world. On the evening in question, one charmingly pert young lady was performing on a topic I no longer recollect; it was, however, perfect Socratic dialogue. No matter how preposterous the answer, no student was ever wrong about anything. Encouraging smiles urged the student to try again, or to stand aside for a classmate eager to recite. Like Molly Bloom's, the teacher's responses were "Yes, yes, yes."

Socratic Diagram

Within a few minutes, two seemingly unrelated mysteries became somewhat clearer. A possible explanation of the self-assurance of Generation X began to suggest itself. Those children of silent, withdrawn parents had been encouraged to believe their spontaneous instincts were oracular, as a way of encouraging the shy to assert themselves. Ten or twelve years of Socratic "Yes, yes, yes" had half-convinced a sizable minority that their views were those of unsullied vestals, clearly to be preferred to those who relied on that famously undependable source, experience. It can take four or five years to recover. Watching the same program, I also seemed to glimpse the roots of a second mystery. For some years I have marveled at the manner of academics in charge of a meeting of peers. Ever since Thomas Jefferson presided over the unruly Senate at Sixth Street in Philadelphia, the parliamentary rules have been strict: the chairman votes only to break a tie, and absolutely never engages in partisan debate. Yet repeatedly one watches these lovely people, often close friends, riding roughshod over colleagues in a debate. Suddenly, while watching television focus on the best of the teachers, some glimmering of understanding begins to emerge. The academic chairman, like the television teacher, states the proposition, puts the question to the audience, and hunts for someone to give the right answer. If the question is amended, it is referred to a committee which will think about it. If someone voices the right answer, it is cheered as having closed the topic to further discussion. Very few votes are ever taken.

It's impossible to crawl inside the heads of people, even your friends, and pick out their motives. But now, the Socratic skeptics at least have some idea where all this is coming from.

Robert Barclay Justifies Quaker Meetings

Robert Barclay

As part of the dissidence and Civil War of 17th Century England, Robert Barclay, a Scotsman, emerged with a point of view that was structured and reasoned in detail. What was almost unique was his reduction of it to a handful of pithy "sound bites." Coupled with membership in a prominent family, these abilities made him a particular friend of James, Duke of York, later King James II. Barclay became a Quaker at an early age.

The whole point of the Reformation was revulsion against the corrupt Catholic clergy, shielded behind some impossibly convoluted legalisms of doctrine. But for the governing establishment, any reform was going too far if it led to anarchy and chaos; combating disorder was then in many ways the central mission of the Catholic faith. The establishment did recognize that public revolt against universal micromanagement led to the scaffold for Kings who insisted on it. But in their view, the need for law and order still demanded some legitimacy, if not organized law. The Ranters, who paraded about stark naked and lived in ways resembling the hippies of the 1960s, were beyond the pale. Quakers, who professed no formal doctrine except silent meditation, might possibly be just as threatening. After all, silent meditation could lead you anywhere, including regicide. But the Quakers, at least, were quiet about it.

George Fox, the founder of Quakerism, had already provided one basis for containing fears of anarchy by organizing local monthly meetings for worship within regional quarterly meetings; quarterly meetings, in turn, were within the overall framework of a yearly meeting. An occasional monthly meeting might develop a consensus for wild and antisocial behavior, and indeed often did so, but it would then have to persuade the quarterly meeting, whose members naturally outnumbered its own. In extreme cases, the whole religion assembled in a yearly meeting. The innate conservatism of the meek would usually silence the extremism of the rebellious few. Very few kings would deny that they themselves could go no further toward despotism without the public behind them. The Quaker problem was to demonstrate what their consensus really was.

Free Quaker Meeting House

Essentially, the answer emerged that any religion which renounced a priesthood, and even renounced having a written doctrine, still needed some sort of institutional memory. If every Quaker began with a clean slate, to develop his own organized set of moral principles, then most of them would never get very far. Even if they did, they would have no time left for milking cows and weaving cloth. Solitary silent meditation was inefficient, particularly if you had faith that everyone was eventually going to arrive at the same convictions as the Sermon on the Mount. The founders of Quakerism took a chance here. To assume the same outcome, you have to assume everyone starts with the same instincts and talents; even 21st Century America has private doubts about that one. Feudal England would have rejected it contemptuously. Carried to an extreme, it was a claim that everyone was as good a philosopher as Jesus of Nazareth, as good a person, as much a Son of God. That seemed like an arrogant claim. A more humble claim was that collectively, listening respectfully to one another in a gathered meeting, the whole world would over time reach the same truths as the Creator. If not, that was still about as close as you were going to get to an oral memory, slowly building on the insights of the past.

Like all the early Quakers, Robert Barclay spent some time in jail. He did visit America in 1681, but it is doubtful if he spent any time here while he was Governor of East Jersey, from 1682 to 1688. The King insisted on his appointment, because he seemed the most reasonable man among the most reasonable sect of dissenters, and therefore the rebel he chose to deal with.

Regulation Precision: Not Entirely a Good Idea

Original Intent and the Miranda Decision

|

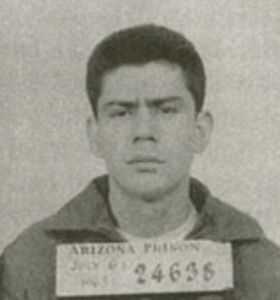

| Ernesto Arturo Miranda |

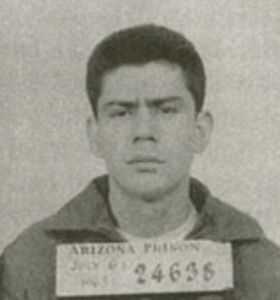

At the lunch table of the Franklin Inn Club recently, the Monday Morning Quarterbacks listened to a debate about Guantanamo Bay, prisoner torture, and police brutality, all of which centered on the Supreme Court decision known as Miranda v Arizona. Ernesto Arturo Miranda was convicted, without ever having been warned of his right to remain silent, and sentenced to 20 to 30 years in prison. In 1966 the U.S. Supreme Court, in a 5-4 decision written by Chief Justice Earl Warren, overturned the conviction because that warning had not been given. The case was retried, and Miranda was convicted and imprisoned on the basis of other evidence that included no confession.

An important fact about this case is that Congress soon passed legislation making the reading of "Miranda Rights" unnecessary, but the Supreme Court later declared, in Dickerson v United States (2000), that Congress had no power to overturn a constitutional right. Some of the subsequent fury about the Miranda case concerned the legal box it came in: it appeared to empower the Supreme Court to create a new right not found in the written Constitution. Worse still, the Court declared that right beyond challenge by the other branches of government. In the view of some, this was a judicial power grab in a class with Marbury v Madison.

Several lawyers at the lunch table on Camac Street seemed to agree that Miranda was a good thing, because its core was not a prohibition of unwarned interrogation but a desirable refinement of court procedure, barring the introduction of such evidence at trial. The lawyers pointed out that the majority of criminal cases simply skirt this sort of evidence and use other sorts, and criminals are routinely sent or not sent to jail without much influence from the Miranda issue. Indeed, Miranda himself was subsequently imprisoned on the basis of evidence which excluded his confession. What's all the fuss about?

And then the agitated non-lawyers at the lunch table proceeded to show how deeper issues have overtaken this little rule of procedure. First objection: the Miranda principle prevents police brutality. Answer: It does not; it only prevents testimony obtained by brutality from being introduced at trial. Second: Miranda contains an exception for issues of immediate public safety. Answer: What difference does that make, as long as the authorities refrain from using the confession in court? The chances are good that a person visibly endangering public safety is going to be punished without a confession. Further, the detailed procedures within Miranda encourage fugitives to discard evidence before they are officially arrested in the prescribed way. Answer: If the police officer sees guns or illicit drugs being thrown on the ground, do you think he needs a confession? Well, what about Guantanamo Bay? Answer: What about it? We understand the prisoners are there mainly so that information about the conspiracy abroad can be obtained from them, and to keep them from rejoining it. The alternative would likely be their execution, either by our capturing troops or by vengeful co-conspirators they had incriminated.

Somehow, this cross-fire seemed unsatisfying. The Miranda decision was made by a 5-4 majority, meaning that a switch of a single vote would have reversed the outcome. The private discussions of the justices are secret, but it seems likely that some Justices were swayed by viewing this edict as a simple improvement in court procedure rather than a constitutional upheaval; Justices with that viewpoint feel they know the original intent and approve of it. Others are apprehensive that the decision has already migrated from the original intent in an alarming way. Nearly everyone who watches much crime television, and even many police officials, believes that Miranda intends all suspects to be tried in total isolation from interrogation, from start to finish. More reasoned observers are alarmed that the process of discrediting all interrogation will lead to an ongoing disregard of lawyers' opinions about court procedure, essentially allowing public misunderstanding to overturn legal standards. Chief Justice William Rehnquist, no less, poured gasoline on this anxiety by declaring that the Miranda warnings have "become part of our national culture."

What seems to be on display is the mechanism by which Constitutional interpretation drifts from the original intent. It is not so much a matter of "judicial activism," or "legislating from the bench," as of non-lawyers confusing and stirring up the crowds until the Justices simply give up the argument. Drift is one thing; virtual bonfires and virtual torch-light parades are quite another.

Principles of the Command of War

|

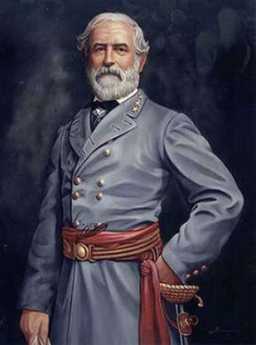

| General Robert E. Lee |

Computer war games now consume a great deal of time that teenaged boys ought to be spending on homework, but study of the underlying principles of commanding an army is a major part of the serious curriculum at West Point. To be good in this course means promotion in the officer corps, and putting principles into successful action creates a few famous heroes for the history books. Lawrence E. Swesey, the founder and president of American Military Heritage Experiences, recently fascinated the Right Angle Club with an analysis of how Generals Lee and Grant, both West Point graduates, put the principles to work in the Civil War. Conclusion: Grant was the better general. It was Henry Adams, the manic-depressive historian, who diverted attention away from the principles of war to such issues as Grant's short stature, taste for whiskey, and poor performance as a Missouri farmer. To be snide about it, a case can be made that Grant was a better writer of history than Adams, as well as a better general than Lee. However, neither Ulysses Grant nor Julius Caesar would probably be considered a great historian if either had lost the war he so famously described.

Carl von Clausewitz

The nine principles of war used by the American military derive from the Prussian officer Carl von Clausewitz, and are also used by the British, who add Flexibility as a tenth. The Chinese and Russians go on to add Annihilation to their list, a thought worth pondering. French military schools base their training on 115 principles laid out by Napoleon, which are presumably ten times more difficult to remember in the heat of battle, if indeed anyone can remember anything when the enemy starts taking aim. In any event, the first universally agreed principle is to have an objective that is both effective and achievable. The second is deciding whether to take offensive or defensive action; the third is to apply sufficient combat mass at one critical time and place. Maneuver is the last of the four main principles. Economy of force, unity of command, surprise, security, and simplicity are five lesser principles, used to achieve the four main ones. Using these lines on the scorecard, how did Robert E. Lee and Ulysses S. Grant score in the fifteen major battles of the Civil War?

To compare the two generals, there is the difficulty that they opposed each other in only five battles, and each was the clear winner in two of them. In the battles where they faced other generals, both Lee and Grant won some, mainly because the opposing general did the wrong thing and lost the battle. The score comes out roughly even and gets complicated when giving more weight to winning bigger battles, less weight to smaller ones. Lee gets more glamor, because his style was to attack, even attacking just after he seemed to be losing. Lee won one battle when he was outnumbered two to one.

But Grant won the war, and that should count for something. Both Lee and Grant knew the superior resources of the North would ultimately overcome the South unless the North made some mistakes, or just got tired and quit. Apparently Lincoln also understood this, and immediately offered the top job to Grant after an interview in which Grant came right out and said so.

Apparently, there is one more principle of war that isn't on the list. You play the hand you are dealt, after counting your cards carefully. In contract bridge, the game is always played with fifty-two cards. It isn't that simple in a war, where you stack the deck if you possibly can.

Recalling the Names of Acquaintances

As age creeps up on us, just about everyone has a little trouble recalling the names of old acquaintances, particularly when they come upon us unexpectedly. Reactions to this affliction vary tremendously. Some folks conclude that Alzheimer's Disease has surely arrived, so they run away and hide. Other seniors are more self-confident and go ahead, unashamed, with little strategies to cope. When you sort things out, you tend to find that shy and retiring people withdraw from social contact out of self-consciousness, whereas bluff, bold extroverts plunge ahead with strategies they have devised. Neither group can remember many names. The extroverts, however, are usually very happy to share their secrets; a whole social group can be transformed by one loud, happy, unashamed coper sharing his techniques.

The late Doctor Francis C. Wood, formerly Professor of Medicine at the University of Pennsylvania, after whom a number of professorships, institutes, and lectures are named, once made a little speech at a reception held at the College of Physicians of Philadelphia: "When you see somebody at a party whose name you ought to recall but can't, just stride right up to him with your hand held out. Just say your name like a gentleman, like 'Fran Wood,' and he will invariably grab your hand gratefully and tell you his name, like 'Jim Jones, Fran. How are you?' After that, you'll get along just fine."

This isn't a medical matter at all. It's a matter of self-confidence and learned techniques. Dick Maas, a resident of the Beaumont continuing care retirement community (CCRC) in Bryn Mawr, PA, recently took pride in describing his own technique in the Beaumont News:

"Let's start with the obvious conclusion that one can't remember something one didn't hear in the first place. Introductions often are given in a social situation, which usually means one is surrounded by loud noise. Furthermore, the person doing the introduction may have a soft voice or poor diction.

"There's hope, however. One can employ a little trick that's socially correct and psychologically useful: Shake hands with your new acquaintance! Hang on until you hear the name clearly and correctly. If necessary, hang on and say, 'I didn't get your name. Would you please repeat it?' Even ask for a spelling if need be. Don't release the hand until you've heard it correctly and repeated it back."

| Handshake |

It isn't always necessary to use a name or shake hands. In Haddonfield, where I live, a custom grew up years ago that everybody says "Hello" or "Good Morning" (Good Morning preferred) to everybody one meets on the street, at least before lunch. There's no hesitation about it: the other person is going to say "Good Morning," so you might as well be ready for it. Of course, some people don't know what to do with themselves; they retreat into their shells and awkwardly try to pass you by without acknowledging your greeting. You can either shrug your shoulders and assume that repetition will bring the newcomer around in a few days or weeks, or you can refuse to let him do that to you. Step in his way and say it louder. To get away with this, you have to be prepared to say something about the weather or your dog or the morning news, which is a pleasant way of signaling that you weren't being belligerent, just showing him the way things are done in Haddonfield. Pass yourself off as uncontrollably extroverted. And be sure to smile.

This sort of custom is a matter of population density. Up in Alaska, a trapper who passed within a mile of another trapper's cabin without saying "Howdy" was inviting a knife fight over the insult. In New York's Times Square, by contrast, a pedestrian is within walking distance of two hundred thousand people; greeting everybody is impossible. Which leads me to a personal story.

At the Pennsylvania Hospital we had an orderly named Sam, who was deeply religious and went around handing out religious tracts. He was pleasant and did a lot of work, so no one interfered. One day I was walking in Times Square with my wife on one arm and my mother on the other. Looking ahead, I could see that a crowd had formed around an orator on a soapbox, who was none other than Sam. I said nothing, but as we drew closer, my mother exclaimed about it and maneuvered the three of us up to ringside to see the excitement. Sam stopped talking to the crowd and shouted, "Hello, Doctor Fisher." So, of course, I replied, "Hello, Sam," and the three of us kept walking down the street. After we had gone two blocks in puzzled silence, my mother abruptly stopped, stamped her foot, and said, "All right, young man. Just who is this Sam?"

Politics of Employer Hiring Preferences

| Interview |

To a remarkable degree, employees tend to remain in either the nonprofit or the for-profit sector of the economy for a lifetime. A prospective new employer naturally wants to know about previous work experience and pokes around for clues. An interviewer may seem to jump around at random when firing questions at a prospective new hire, but the question of which sector you come from is hard to dodge.

Whether it is a reasonable position or not, almost everyone on both sides of the interview desk has the impression that for-profit employers prefer to hire people with experience in the for-profit sector, and the reverse is true for non-profits. So far, so good; experience in related fields is an attractive feature. But going considerably beyond favoring prospective employees with related experience, there is a general impression that experience in the other sector has a curse attached, reducing the chances of being hired. When an impression is this widespread, it no longer matters whether it is sensible. Give the wrong answer, and you don't get the job; that's reason enough. And it's equally true in both sectors, so the workforce segregates pretty quickly.

It's plainly true that the for-profit sector votes Republican, while the non-profit sector registers, votes, and talks Democratic. Since young people usually vote for Democratic candidates, it seems to follow that party-switching runs mainly in the direction of young Democrats becoming Republicans after a few years of employment, although the reverse is seen when young residents of farm communities move to the city, with college sandwiched in between.

Going to college may not be exactly like being employed by a college, but it's close enough. The faculty stand in a power relationship to students similar to that of a boss to employees: in command, but also serving as role model and parent figure. It is axiomatic that students emerge from college more liberal-leaning than when they entered. It's beyond challenge that college faculties are the most liberal-leaning group in America, and their institutional employers are all in the non-profit sector to some degree. As long as government research grants, scholarship grants, and construction subsidies (and indirect overhead allowances) continue to dominate the finances of higher education, the allegiance of all colleges and universities will belong to the party so proudly representative of non-profit principles. Professor Gordon S. Wood of Brown University has propounded the theory that the 18th-century concept of a gentleman had three distinctive features: he didn't need to work, he deplored aggressive money-making, and he went to college. Americans dislike the concept of aristocracy, but they strongly favor playing the role of a gentleman. It's as good an explanation as any.

Future trends are of course hard to predict, but since the proportion of the population going to college is steadily increasing, a strong force continues to push toward strengthening the Democratic Party. But since taxes derived from the for-profit sector are the ultimate source of all non-profit revenue, some strong push-back against present trends also seems inevitable.

Three Threes

| A Prisoner of the Stone |

Thank you, Mr. President. Tonight I'm to discuss the Right Angle Club's odd contribution to book publishing, with emphasis on history books. To make it easier to follow, I title this speech Three Threes, for three meanings in three categories each. We touch, in threes, on 1) book technology, 2) the varying proportions of fact and opinion, and 3) pitfalls to success. Three times three makes nine bases to touch, so please understand if I must hustle.

First, let's deconstruct the book in front of you. Ten years ago, I began taking notes at our Friday lunches, writing reviews of our speakers. The younger generation calls it "blogging" when you then broadcast such essays on the Internet; that's how I started the journey, under the title Philadelphia Reflections. The blogs concentrate on the Philadelphia "scene" because that's what our speakers usually do. Our club has met since 1922, so by rights the collection might contain three thousand blogs, but unfortunately I arrived late, so we have only 400. Call them 400 beads in a bowl, subdivided into ten annual bowls of beads.

After a while, themes emerged: if I picked similar beads out of the combined bowl of four hundred, groups of similar beads became necklaces. Selected necklaces can then be artfully arranged into a rope of necklaces, which is to say, a book about Philadelphia, from William Penn to Grace Kelly. Thus, without realizing it, I had been writing quite a big book. I described this curiosity to my computer-savvy son, who wrote a computer program to make it simple for anyone to rearrange such material; simple, that is, for an author to wander among his own random thoughts and only in retrospect extract the books. Many authors, I now believe, only imagine that they sit down to write a book from start to finish, eventually discovering that one was already there, waiting to emerge. Because it wasn't planned, many of them must then rewrite a coherent book once they finally realize what it was about. Since I am also in the publishing business in a small way, I was able to guide my son into automating the subsequent steps of publishing, so that any author could perform them without a publisher. That makes book authorship resemble fine art: the artist does it all except marketing. This program can now aspire to eliminate the whole old-fashioned assembly line of publishing, which is in the process of dying anyway. So here's the first cluster of threes: blogs re-assembled into chapters, chapters re-assembled into books, and copy editing, spell checking, and book design mostly performed by the computer.
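The bead-and-necklace scheme described above amounts to a simple two-level grouping: essays gathered into chapters by theme, and chapters arranged in a chosen order into a book. The sketch below is a hypothetical illustration only, not the program my son wrote; the `Blog` and `Chapter` classes, the topic tags, and the functions `build_chapters` and `build_book` are all invented for the example.

```python
from dataclasses import dataclass, field


@dataclass
class Blog:
    """One essay (a "bead"); topics are hypothetical tags used to find similar beads."""
    title: str
    topics: frozenset


@dataclass
class Chapter:
    """A "necklace": every blog sharing one theme."""
    theme: str
    blogs: list = field(default_factory=list)


def build_chapters(blogs, themes):
    """Make one chapter per theme; a blog tagged with several themes joins each one."""
    return [Chapter(t, [b for b in blogs if t in b.topics]) for t in themes]


def build_book(chapters, order):
    """Arrange selected chapters, in the given order, into a table of contents."""
    by_theme = {c.theme: c for c in chapters}
    return [(theme, [b.title for b in by_theme[theme].blogs]) for theme in order]


if __name__ == "__main__":
    bowl = [
        Blog("Three Threes", frozenset({"publishing", "history"})),
        Blog("Revenue Stream for Historical Documents", frozenset({"publishing"})),
        Blog("Recalling the Names of Acquaintances", frozenset({"memory"})),
    ]
    chapters = build_chapters(bowl, ["publishing", "memory"])
    for theme, titles in build_book(chapters, ["memory", "publishing"]):
        print(theme, titles)
```

Because a chapter is just a filter over the whole bowl, the same essay can appear in more than one book without being copied, which is what lets an author extract books "in retrospect."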

* * * In addition to these levels of technology, historical literature can also be seen as dividing into three layers of fact mingled with interpretation. It starts with primary sources, documents allegedly describing pure facts. Scholars pore over such documents and comment on them, usually in scholarly books called secondary sources. Unfortunately, many publishers reject anything unlikely to sell very well, however essential it may be to the advancement of academic careers. Eventually, authors of history turn to the general reading public and generate tertiary sources, sometimes textbooks, sometimes "popularized" history in rising levels of distinction. There you have the traditional three levels of historical writing.

A German professor named Leopold von Ranke formalized this hierarchy about 1870, vastly improving the quality of history in circulation by insisting that nothing could be accepted as true unless based on primary documents. Von Ranke did in fact transform nineteenth-century history from the opinionated propaganda into which it had largely declined into a renewed science. At its best, it aspired to return to Thucydides, with footnotes. Unfortunately, Ranke also encased historians in a priesthood worshipping piles of documents largely inaccessible to the public, often discouraging anyone without a Ph.D. from hazarding an opinion. Printing twenty or thirty pages of bibliography per book is now a cost that modern publishing can ill afford. It also makes scholarship a heavier task for the average graduate student, because the depth of his scholarship is measured by the number of his citations, and incidentally it "turns off" the public about history. All of that seems quite unnecessary, since primary-source links could be provided independently on the Internet at negligible cost, and supplied not merely to the scholar but to any interested reader. Rare documents could then be enjoyed at home, with no need to labor through citations to reach documents in a locked archive.

The general history reader must now remain content with tertiary overviews and a few brilliant secondary ones, because document fragility bars public access to primary papers; yet many might enjoy reading primary sources if they were physically more available. The general reader also needs lists of "suggested reading," instead of the "garlands of bids," as one wit describes bibliography. If you glance through this evening's presentation book, you will see that I have begun to include separate Internet links to both secondary and primary sources, because the bibliographies of the secondaries lead to the primaries. Unfortunately, you must fire up a computer to reach these treasures. The day fast approaches when every scholar can carry a portable computer with two screens, one displaying the historian's commentary and the other displaying related source documents. History on paper seems likely to persist in some form, partly because it is cheaper, but also because e-books make it hard to jump around. Even newspapers are encountering this obstacle. E-books are sweeping the field in fiction because fiction is linear. But non-fiction wanders around, a distinction often unappreciated by computer designers.

* * * A third and final group of threes must confess some traps and dangers of history writing. The first is technical. I linked primary documents already on the Internet to the appropriate commentary within my blog. In those early days of enthusiasm, volunteers were eager to post source material into the ether, just asking for someone to read it. I had linked Philadelphia Reflections to nearly a thousand citations when the invincible flaw exposed itself: historians were eager to post source documents, but not so eager to maintain them. One link after another was dropped by its author, producing a broken link for everyone else. The Internet kept trying to locate something that wasn't there, slowing the postings to a pitiful speed. Reluctantly, I went through my website, removing the broken links and then most of the rest. Maybe linking was a good idea, but it didn't work. There is thus no choice but to look for institutional repositories for historical linkage, and for the funding to maintain them. Free-loading is not feasible, although only recently it seemed to be.

This first pratfall assumed technology would provide more short-cuts than in fact it would. A second warning attaches to a quotation from Machiavelli, ordinarily remembered as a schemer, not a philosopher.

"There is nothing more difficult to take in hand, more perilous to conduct, or more uncertain in its success than to take the lead in the introduction of a new order of things."

That's often for the best. But regardless of whether ideas are good or bad, new ones all jump through the same hoop, so earnest virtue does not always triumph. Von Ranke was not a Scot, but he showed us how Many a Mickle -- Makes a Muckle. Machiavelli showed us that hard work alone does not always succeed.

As a third and concluding point, it was Michelangelo who put his finger on the final crux of history. The sculptor made the wry comment that sculpting is easy: just carve away the stone you don't want. It is a great fallacy to assume that ancient history can be isolated from current politics, or even to believe that history teaches the present; it is just the other way around. Just as Michelangelo felt statues were "prisoners of the stone" from which they were carved, the insights and generalizations of history emerge from a huge mass of unsorted primary documents. Writing history is a process of discarding documents that fail to support a certain conclusion, often those that send inconvenient messages to modern politics. Carried too far, de-selecting inconvenient documentary sources amounts to destroying alternative viewpoints. What the author chooses to discard is then more important than what he chooses to include. Unless other linkages are consciously maintained, they soon enough disappear by themselves.

Revenue Stream for Historical Documents

| The Economist |

1.-2. Save the cost of publishing long bibliographies in every copy of a book whose readers mostly make no use of them, while still making the bibliography available to those who will. The Economist magazine now does this by notifying readers that the source documents for its articles are available on the Economist website. This is a suitable method for publications with only limited bibliographies but very large circulation and short shelf life. Essentially, it is a free service to readers that reduces the clutter and intimidation of citations to essentially unavailable sources.

| Kindle |

On the other hand, a recent book about Thomas Jefferson had 120 pages of bibliography citations. Unless that book has an unusually scholarly readership, most of the cost of printing and distributing those 120 pages was wasted. Printing the book without the citations, while also publishing a diskette, Kindle edition, or website containing nothing but the citations, would produce considerable savings for publisher and reader alike, and readers who want the citations ought to be willing to pay for them. Unfortunately, there is resistance to anything new, and you may have to do it both ways until the idea catches on. The cost should include the right to some recognizable copy mark on the book, signifying that this feature is available. Bowker and advertisers should be encouraged to use something smaller but similar. In that way, the concept can be advertised in advance of actual market penetration.

Some thought should be given to exploiting the searchability of such a bibliography. At negligible incremental cost, it can be re-sorted and listed in a wide variety of orders (by author, date, or publication source). For example, identifying all the citations available at one location should help the scholar decide where to pursue his work. Perhaps it could also be produced in ways that help the librarian locate the material within the library, or pick out all the material at one location before moving on.
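The re-sorting and grouping described above takes only a few lines once the bibliography is stored as data rather than print. This is a minimal sketch under stated assumptions: the `Citation` record and its fields (`author`, `year`, `source`, `location`) are hypothetical, and the sample entries are placeholders, not real citations.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class Citation:
    """One bibliography entry; these fields are assumptions chosen for illustration."""
    author: str
    year: int
    source: str    # publication or archive title
    location: str  # library or archive holding the item


def sorted_by(citations, field):
    """Return the citations re-sorted by any one field, e.g. 'author' or 'year'."""
    return sorted(citations, key=lambda c: getattr(c, field))


def grouped_by_location(citations):
    """Group citations by holding location, so one visit can cover all of them."""
    groups = defaultdict(list)
    for c in citations:
        groups[c.location].append(c)
    # Locations in alphabetical order; within each, entries sorted by author.
    return {loc: sorted_by(items, "author") for loc, items in sorted(groups.items())}


if __name__ == "__main__":
    bibliography = [  # placeholder entries, not real sources
        Citation("Author B", 1790, "Source 1", "Archive X"),
        Citation("Author A", 1801, "Source 2", "Archive Y"),
        Citation("Author C", 1776, "Source 3", "Archive X"),
    ]
    for c in sorted_by(bibliography, "year"):
        print(c.year, c.author)
    for loc, items in grouped_by_location(bibliography).items():
        print(loc, [c.author for c in items])
```

The grouping by location is the "one visit per archive" list a scholar or librarian would want; any other field can be swapped in without reprinting anything.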

| ABE Books |

3. Widen the availability of the text of primary sources. Much of it would interest the non-professional reader if he could get to it more easily, and it would enlarge his sense of participating in the interpretation, his "buy-in." Unfortunately, most photocopying is still of poor quality, and the most useful version of the original is one typed in at a keyboard. Therefore, I recommend searching for ways to induce the scholar to do it for you: if he really thinks a document is important, would he please keyboard it for everyone else? If a way is provided to count the number of "hits" on a document's citations, it will lead you to the popular documents to begin with. Please don't try to start with "A" and end with "Z". After you have produced digitized copy, photocopy it if you wish. The local Athenaeum makes quite a lot of revenue from selling reprints of architectural drawings, so there are exceptions.
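Counting "hits" to decide which documents to keyboard first, rather than starting at "A," is a simple tally-and-rank. The sketch below assumes a hypothetical hit log that is just a list of document IDs, one entry per time a reader followed a citation; the function name `transcription_queue` and the IDs are invented for illustration.

```python
from collections import Counter


def transcription_queue(hit_log, already_done=frozenset()):
    """Rank documents by how often readers followed their citation links.

    hit_log is a hypothetical list of document IDs, one entry per "hit".
    Documents already keyboarded are skipped; the most-requested come first.
    """
    counts = Counter(doc for doc in hit_log if doc not in already_done)
    # most_common() orders by descending count; ties keep first-seen order.
    return [doc for doc, _ in counts.most_common()]


if __name__ == "__main__":
    hits = ["doc-17", "doc-03", "doc-17", "doc-42", "doc-17", "doc-03"]
    print(transcription_queue(hits, already_done={"doc-42"}))
```

The queue is recomputed from the log whenever needed, so the order of work shifts naturally as readers' interests change.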

4. Do not limit yourself to primary sources. There are copyright issues here, but a link to Amazon will get you revenue from Google, and a used copy of a book from ABEbooks will be delivered to your home by United Parcel Service. It's often cheaper than parking near a library.

REFERENCES

Meacham, Jon. Thomas Jefferson: The Art of Power. ISBN 978-1400067664.

| Posted by: Burchard | Sep 6, 2011 7:22 PM |

12 Blogs

Methods of Thought: Scientific, Logical, Future

Ideas migrate slowly like an army of ants, but methods of thinking jump like grasshoppers, every few centuries.

Rise and Fall of Books

The Director of America's first library sees books as mainly a 19th-century phenomenon.

Many Mickles in One Muckle

If bigger chips get too hot for a computer, engineers just add more chips instead of making bigger ones.

Socratic Teaching

Drink that hemlock right now, or I'll pour it down your throat.

Robert Barclay Justifies Quaker Meetings

Robert Barclay, one of the handful of English philosophers of enduring note, came close to establishing the doctrines of the Quaker Church, a religion with no formal doctrine.

Regulation Precision: Not Entirely a Good Idea

Original Intent and the Miranda Decision

Right before our eyes, we can watch the Miranda decision migrate away from the original intent.

Principles of the Command of War

A soldier must know how to shoot a gun, but a general must know how to use an army.

Recalling the Names of Acquaintances

As we get older, the speed of recall seems to slow down for most people, leading to a problem remembering names. A number of senior citizens have figured out ways to cope.

Politics of Employer Hiring Preferences

The for-profit or nonprofit nature of your previous employer affects your chances of being hired by a new boss.

Three Threes

Book publishing is becoming an electronic world, and Philadelphia could regain dominance of it if the city sees itself as a model of how to balance private gain with public benefit.

Revenue Stream for Historical Documents